IPTC-Amazon Collaboration

IPTC and Amazon have launched a joint training initiative for master's students in applications of machine learning and deep learning, specifically in technologies to extract and combine self-supervised representations for multimedia processing. These technologies have great potential in many areas, such as content generation (audio, image, video, or sign language representation), classification, labelling, and search.

For 2022-2023, the training plan is:

1.- Initiative presentation & recruiting. The initiative, research topic and recruiting procedure will be introduced to the students enrolled in UPM ETSIT Master courses.

2.- Study & training. Five selected students will join the initiative and spend several months gaining knowledge and developing their skills in the area of work.

3.- Applications development. In parallel with the study & training stage, students will begin developing several applications, such as:

- Sign language motion generation from high level sign characteristics

- Speaker diarization with multimodal inputs

- Pose and spatial movement as input for dynamic content search & generation

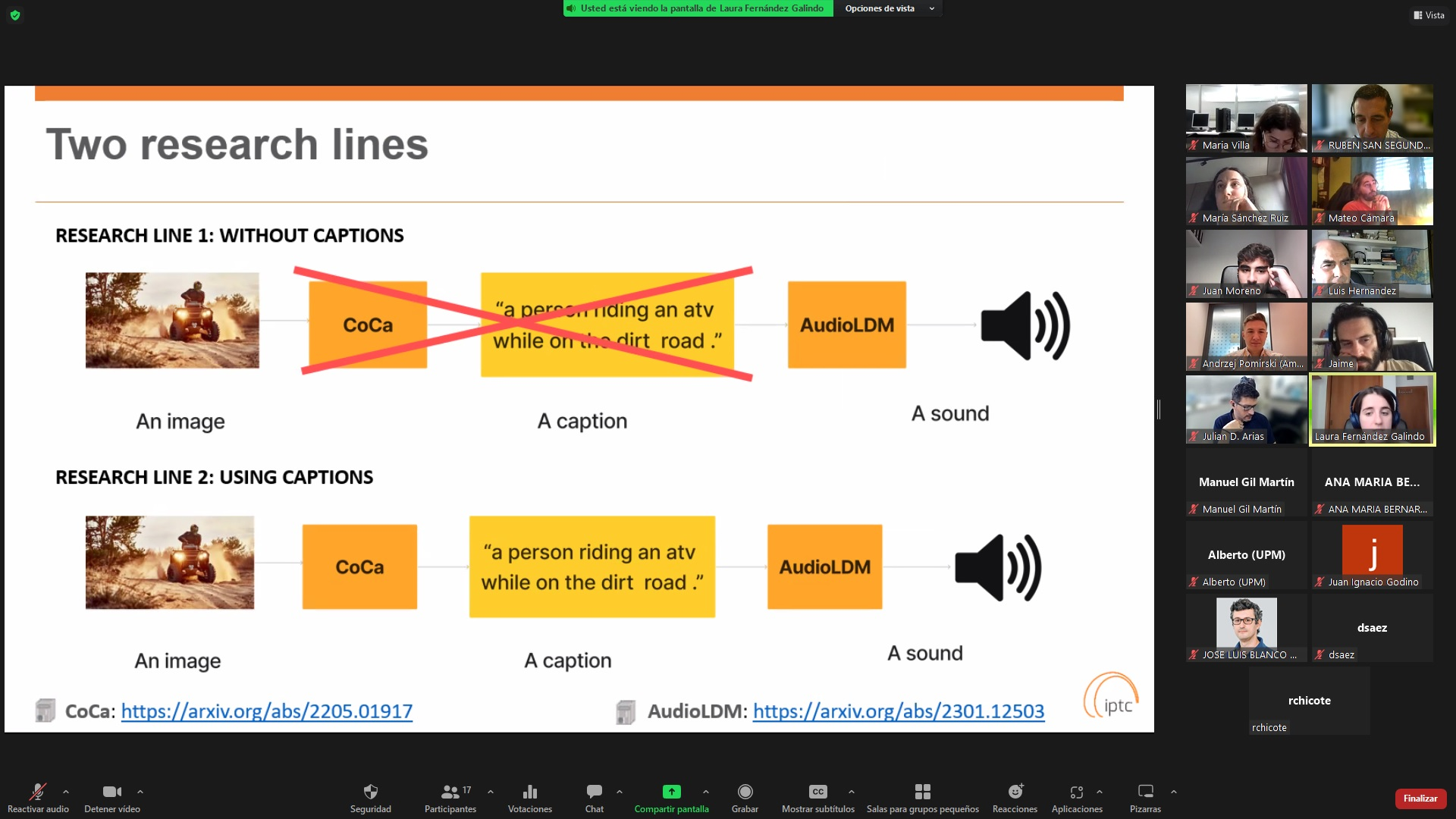

- Entangling AI-audio synthesis models and multimodal representations

- Zero-shot sonorizing of video sequences

These applications might change depending on how the state of the art evolves and on the availability of the necessary resources.

Students will be supervised throughout the process by both IPTC professors and Amazon researchers.

Detailed description of activities

UPM DRIVE link for sharing information

- Slides of the Kick-off Meeting (First General Meeting), Dec. 1st, 2022

- Slides of the Second General Meeting, Jan. 23rd, 2023

- Slides of the Third General Meeting, April 13th, 2023

- Andrzej visits IPTC, April 28th, 2023

- Slides of the Fourth Technical Meeting, June 15th, 2023

- Slides of the Final Technical Meeting (Part I, Part II), Nov. 15th, 2023
